State of a MediaStreamTrack

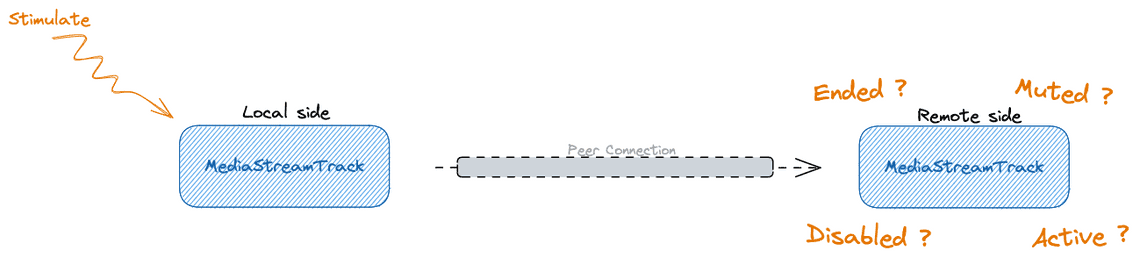

The MediaStreamTrack interface defined in the W3C document Media Capture and Streams is one of the key interfaces to understand when developing a WebRTC application.

This interface represents a single media track that originates from one media source, such as a microphone or a webcam. Through it, the application gets access to the media, whether it comes from a physical or virtual device, from a generator such as a file or a canvas, or from a peer connection.

But even though this interface seems easy to use, it exposes several properties that together make up its overall state. I spent some time understanding how to interpret their values and how to respond to their changes.

I wanted to look at the state of the track when performing actions locally, and to understand the consequences on the remote side, if any.

As usual, it is not as easy as one might imagine…

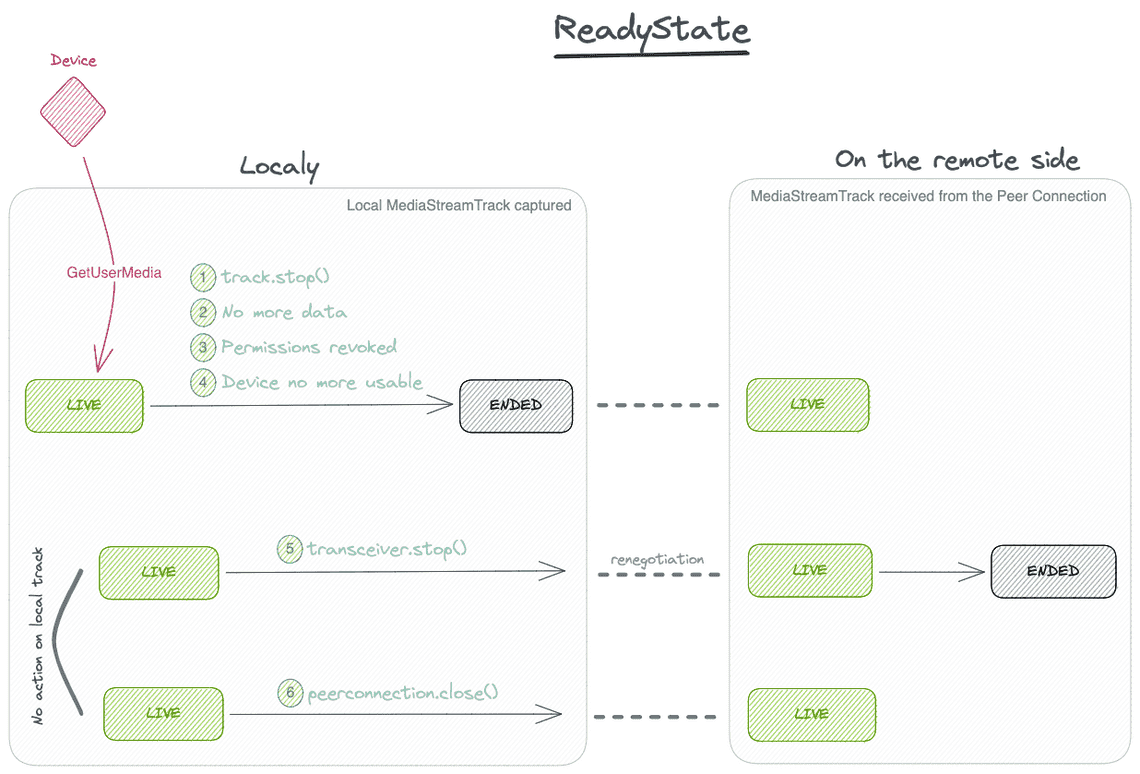

ReadyState: The track lifecycle

The first thing I would like to understand with a MediaStreamTrack is its lifecycle.

Here, it seems to be simple because there are only 2 states: live and ended.

When live, the MediaStreamTrack is able to render media. Conversely, when ended, the track carries no media, and this is permanent: whatever happened to the track, it will never render media again.

This can happen in several ways:

The user stopped the source: this is the most common way to switch to ended.

There was no more data available from the source.

The user revoked access permissions to the device.

The device generating the source data has been disconnected, removed or ejected, or is in trouble.

To access the state, MediaStreamTrack offers the readyState property, which exposes the current value.
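To make the lifecycle concrete, here is a minimal TypeScript sketch that reads readyState and reacts when the track ends. The TrackLifecycle interface and the watchEnded helper are hypothetical names introduced for illustration; only readyState, stop() and the ended event come from MediaStreamTrack. The sketch relies on the fact that calling stop() does not fire the ended event, so the callback is invoked by hand in that case.

```typescript
// Minimal structural view of the parts of MediaStreamTrack used here, so the
// sketch does not depend on browser type definitions.
interface TrackLifecycle {
  readonly readyState: "live" | "ended";
  stop(): void;
  addEventListener(type: "ended", listener: () => void): void;
}

// Hypothetical helper: calls onEnded at most once, whichever way the track ends.
// Returns a function that stops the track on behalf of the application.
function watchEnded(track: TrackLifecycle, onEnded: () => void): () => void {
  let notified = false;
  const notify = (): void => {
    if (!notified) {
      notified = true;
      onEnded();
    }
  };

  if (track.readyState === "ended") {
    // Already ended: the state is permanent and no event will follow.
    notify();
  } else {
    // Fired when the source goes away (device unplugged, permission revoked...).
    track.addEventListener("ended", notify);
  }

  return (): void => {
    // stop() moves readyState to "ended" without firing the "ended" event,
    // so the callback is triggered manually.
    track.stop();
    notify();
  };
}
```

In a browser, a track obtained from getUserMedia could be passed as the first argument; the returned function would then be wired to a "stop" button.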

I tried to summarize how readyState evolves on both sides depending on the actions performed.

From this diagram, there are three things to notice:

Direct actions done on the track or on the media source change its state.

Indirect actions done on the RTCPeerConnection don’t affect the state of the track, which is normal because the RTCPeerConnection is just a communication medium, so the track can continue to live without it.

On the remote side, the track is only affected when the transceiver associated to the sender is stopped. Be careful: closing the RTCPeerConnection on the local side has no effect on the track on the remote side.

This last point can lead to strange behavior in the application if you don’t rely on other events to update it. I expected the remote track to move to ended, as there is no more data available, but browsers do not implement it this way.

Not having a mirrored track on the remote peer is, for me, a source of confusion.
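One of the "other events" the receiving application can rely on is the state of its own RTCPeerConnection. The sketch below is an assumption on my side, not something shown in the diagram: it combines the track's readyState with the connectionState value of the receiving RTCPeerConnection, assuming that value eventually leaves connected once the other peer has closed. The remoteTrackUsable helper and the two type names are hypothetical.

```typescript
// The possible values of RTCPeerConnection.connectionState.
type ConnectionState =
  | "new"
  | "connecting"
  | "connected"
  | "disconnected"
  | "failed"
  | "closed";

// Only the property needed from the received MediaStreamTrack.
interface RemoteLifecycleView {
  readonly readyState: "live" | "ended";
}

// Hypothetical helper: a remote track is only expected to deliver media while
// it is live AND the local RTCPeerConnection is connected. Treating
// "disconnected" as unusable is a design choice: that state can be transient.
function remoteTrackUsable(
  track: RemoteLifecycleView,
  connectionState: ConnectionState
): boolean {
  if (track.readyState === "ended") {
    return false;
  }
  return connectionState === "connected";
}
```

The function would typically be re-evaluated from a connectionstatechange handler on the receiving side, so that the UI stops showing a track that still reports live.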

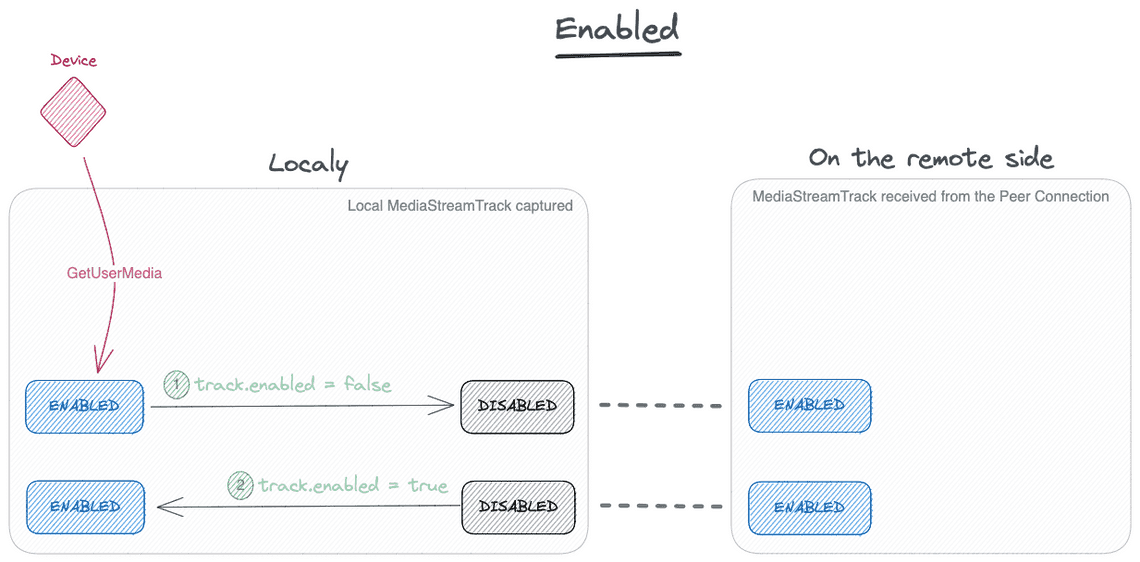

Enabled or disabled

When the track renders audio or video, it is enabled. MediaStreamTrack has an enabled property, which is equal to true when the track can render.

The application can “mute” the track by setting the enabled property to false. The track is then disabled. When disabled, the track outputs either black frames (video track) or silence (audio track).

Be careful: for a video track, the webcam’s indicator light is not switched off when the track is disabled.

As shown in the diagram, the remote side is not aware of this change. The track on the remote side stays enabled whatever changes are made on the local side. That’s why this way of muting/unmuting requires additional signaling messages to notify the remote application.

So, I don’t know what to do with the enabled property for tracks received from an RTCPeerConnection.

Again, the value of this property is not replicated on the remote side.
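Since the enabled value is not replicated, the local application has to announce the change itself. Here is a minimal sketch of that idea. The message shape, the setEnabledAndNotify helper and the send callback are all hypothetical and depend on your own signaling layer. The track is identified by the mid of its transceiver (RTCRtpTransceiver.mid), because that value is shared by both peers after negotiation.

```typescript
// Only the writable property needed from the local MediaStreamTrack.
interface ToggleableTrack {
  enabled: boolean;
}

// Hypothetical signaling message: name and fields are assumptions.
interface TrackEnabledMessage {
  type: "track-enabled";
  mid: string; // mid of the transceiver carrying the track
  enabled: boolean;
}

// Changes the enabled property and tells the remote application about it.
// Nothing is sent when the value does not actually change.
function setEnabledAndNotify(
  track: ToggleableTrack,
  mid: string,
  enabled: boolean,
  send: (message: TrackEnabledMessage) => void
): void {
  if (track.enabled === enabled) {
    return;
  }
  track.enabled = enabled;
  send({ type: "track-enabled", mid: mid, enabled: enabled });
}
```

On the receiving side, the application would keep the last value received per mid and use it to update the UI, since the received track itself keeps reporting enabled = true.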

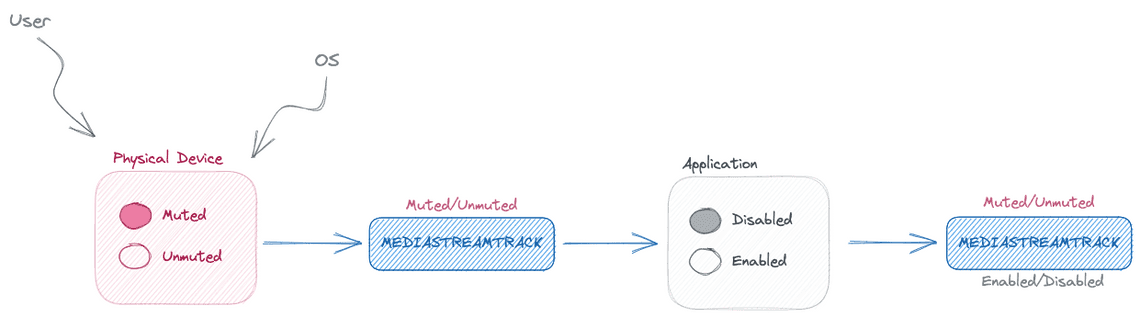

Muted or unmuted

When a track renders audio or video, it is unmuted. MediaStreamTrack has another property, muted, which is equal to false when the track can render.

The difference here is that this property is mostly outside the control of the application. There is no API to directly change this value. Only a getter.

Muted is different from enabled. The muted property indicates whether or not the track is currently able to provide media output.

In most cases, a muted track can be obtained by pressing the physical mute button on the device or, for a microphone, by setting the input volume of the device to 0 from the OS. In these cases, the local track becomes muted.

As with the enabled property, when a track is muted “physically” or “externally” on the local side, nothing changes on the remote side and no event is propagated.

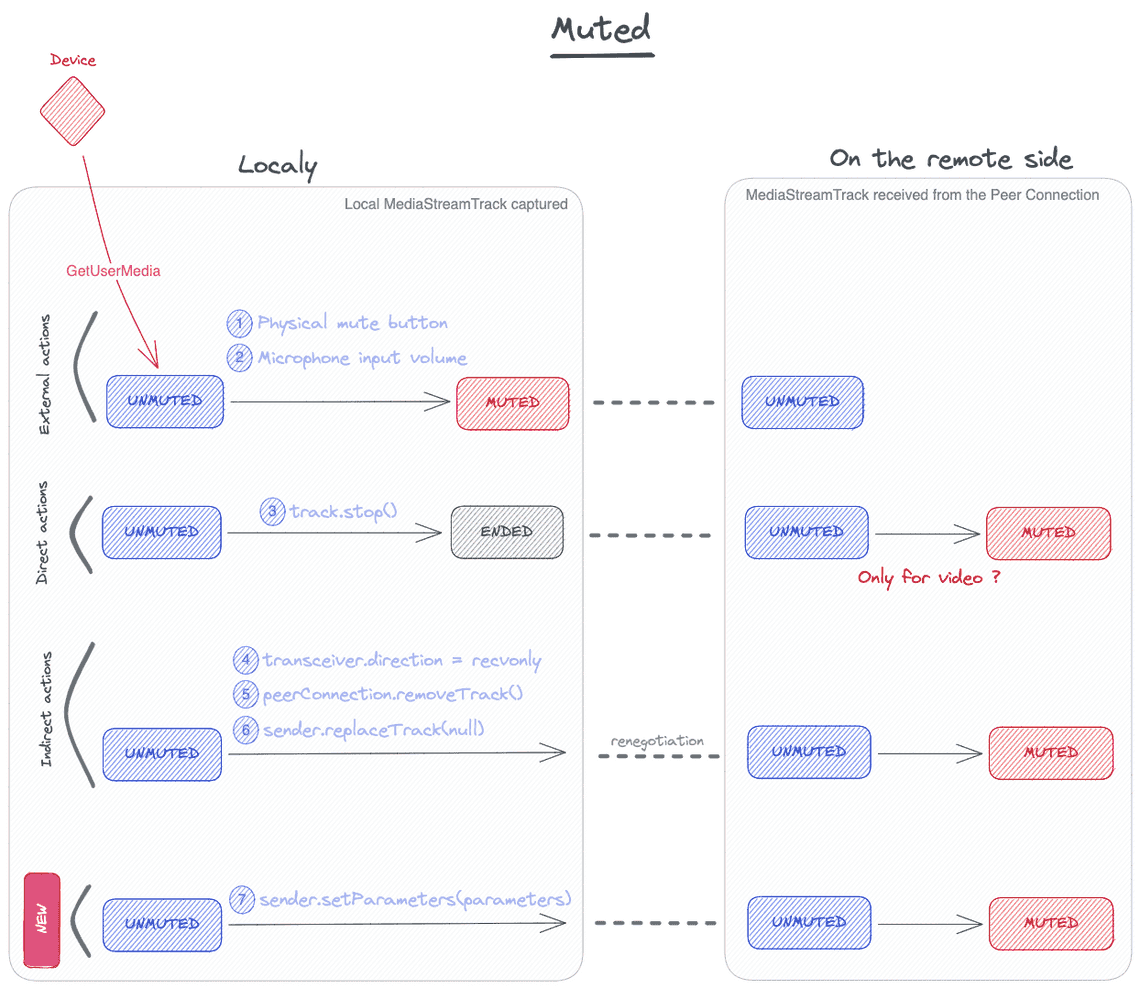

This diagram summarizes the actions that impact the muted property locally or on the remote side:

External actions performed on the device or in the OS modify the local track without having any influence on the remote side.

Direct actions on the track, such as stopping it, behave strangely, though this is perhaps due to the device itself: in my test, stopping the video track changed the remote track to muted, whereas this was not the case for audio.

Indirect actions on the track using the ORTC API and a renegotiation changed the remote track to muted.

And finally, using setParameters from the RTCRtpSender interface to prevent the encodings from being sent (by setting the active property to false) changed the remote track to muted. No packet is sent when muted, but remember that the camera is not switched off during this period.

Using setParameters is interesting because it has no influence on the local track: there is no need to remove or replace the track, since just modifying the encodings of that track mutes the remote track.
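The setParameters approach can be sketched as follows. The getParameters() and setParameters() methods and the active field of each encoding belong to RTCRtpSender; the SenderLike interfaces and the setSendingActive helper are hypothetical names used to keep the example self-contained.

```typescript
// Structural views of RTCRtpSender and its parameters, reduced to what is used.
interface EncodingLike {
  active?: boolean;
}

interface SendParametersLike {
  encodings: EncodingLike[];
}

interface SenderLike {
  getParameters(): SendParametersLike;
  setParameters(parameters: SendParametersLike): Promise<void>;
}

// Hypothetical helper: stops (active = false) or resumes (active = true) the
// sending of every encoding without touching the local track.
function setSendingActive(sender: SenderLike, active: boolean): Promise<void> {
  // The object returned by getParameters() must be modified and handed back
  // as-is, so that the other fields it contains stay untouched.
  const parameters = sender.getParameters();
  for (const encoding of parameters.encodings) {
    encoding.active = active;
  }
  return sender.setParameters(parameters);
}
```

With a real connection, the sender would come from the RTCPeerConnection, for example from getSenders(), and calling the helper with false is the step that, in the test described above, changed the remote track to muted.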

The global picture

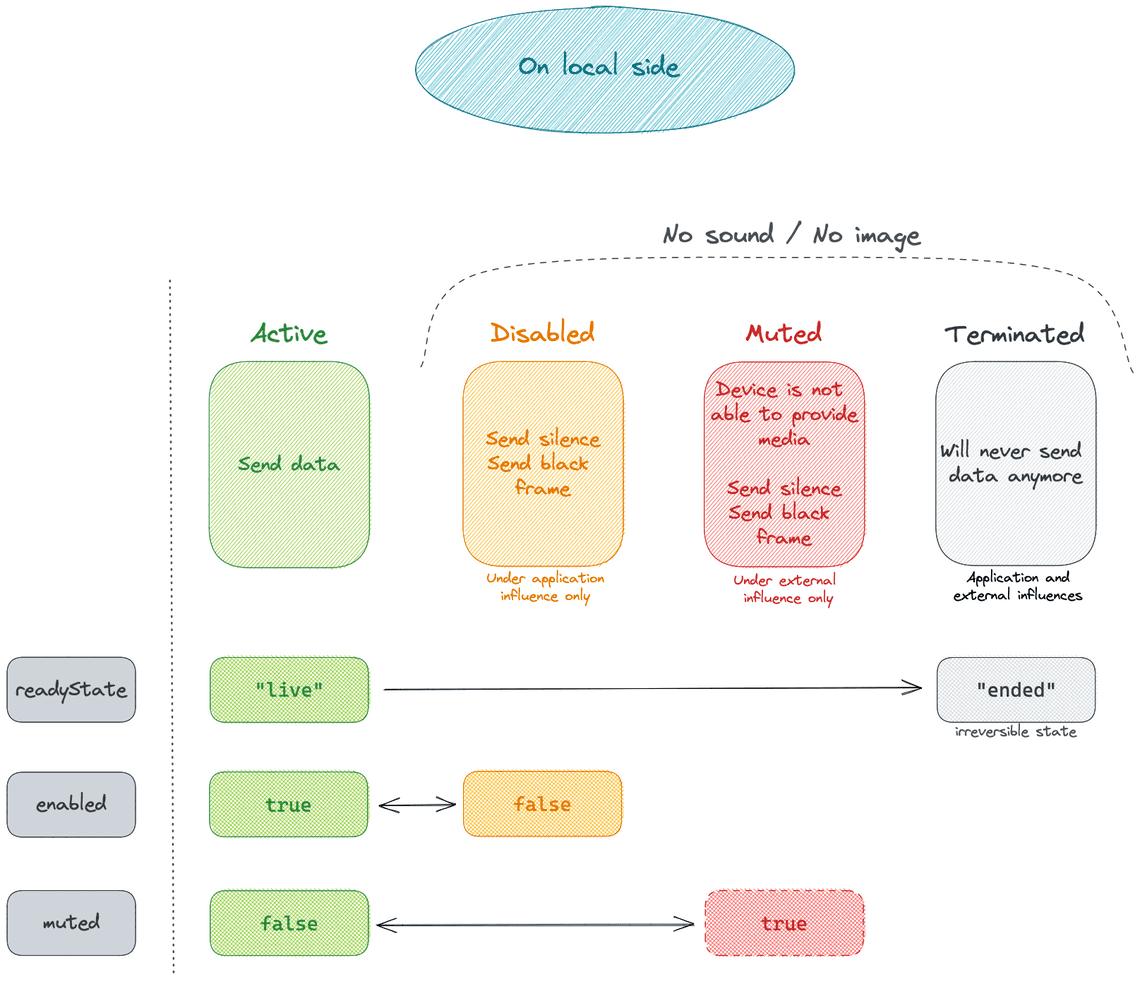

I preferred to separate and have one global picture for understanding the state of a MediaStreamTrack locally and another for the remote side.

On local side

On the local side, the state of the track is quite clear.

Four states can be distinguished, grouped into 2 categories:

When the track is able to provide the media: this corresponds to the Active state, where readyState = “live”, enabled = true, muted = false.

When the track is not able to provide the media: this corresponds to the 3 other states: Disabled, Muted and Ended.

Virtual devices will be Active most of the time and can be difficult to detect without using other methods, such as those discussed in the article Do you hear me?

Whatever happens outside the application, the track is notified through the onmute and onunmute events.

In the application, direct actions on the track such as changing the enabled property or calling the stop() method change the state of the track directly.

Here, except for virtual devices, if the track is active, the application should receive the media. In the other cases, the application is able to react by checking the track’s properties and the events received.
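The four local states can be derived from the three properties, as in the sketch below. The helper names and the order of the checks are my own assumptions: the article does not say which state wins when a track is both muted and disabled, so Ended is tested first, then Muted, then Disabled.

```typescript
type LocalTrackState = "Active" | "Disabled" | "Muted" | "Ended";

// The three properties of MediaStreamTrack that make up its state.
interface TrackStateView {
  readonly readyState: "live" | "ended";
  readonly enabled: boolean;
  readonly muted: boolean;
}

// Hypothetical helper mapping the properties to one of the four local states.
// Precedence (Ended, then Muted, then Disabled) is an assumption.
function classifyLocalTrack(track: TrackStateView): LocalTrackState {
  if (track.readyState === "ended") return "Ended";
  if (track.muted) return "Muted";
  if (!track.enabled) return "Disabled";
  return "Active";
}

interface ObservableTrack extends TrackStateView {
  addEventListener(
    type: "mute" | "unmute" | "ended",
    listener: () => void
  ): void;
}

// Reports the current state, then reports again on every track event.
// Changing enabled or calling stop() fires no event, so the application
// has to call classifyLocalTrack itself after those direct actions.
function watchLocalTrack(
  track: ObservableTrack,
  onChange: (state: LocalTrackState) => void
): void {
  const report = (): void => onChange(classifyLocalTrack(track));
  track.addEventListener("mute", report);
  track.addEventListener("unmute", report);
  track.addEventListener("ended", report);
  report();
}
```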

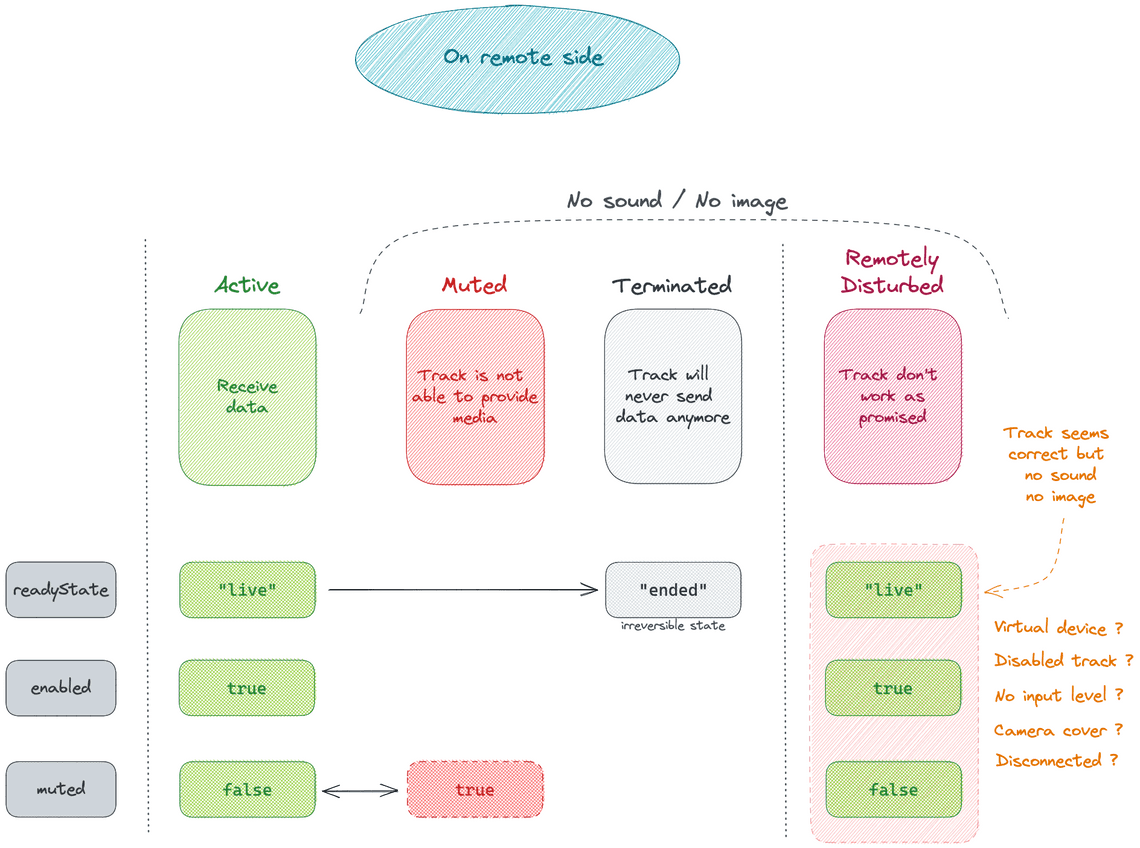

On remote side

On the remote side, things are a bit different.

I have retained 4 states, grouped into 2 categories:

When the track’s state is predictable: Muted and Ended. In these states, there is no confusion about whether or not the track can provide the media: no media will be provided.

When the track’s state is unpredictable: Active and Remotely Disturbed. Concretely, these look like the same state, because the values of the readyState, enabled and muted properties are identical in both. Here, the remote application needs to rely on other information (sent through the signaling layer) to correctly interpret the track state, because in the Remotely Disturbed state, all properties seem to indicate that the application should receive the media, but from the user’s point of view, things are different…

The Remotely Disturbed state covers both cases forced by the remote application, such as disabling the track, and unforced ones, such as when something goes wrong on the remote side.
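Because Active and Remotely Disturbed cannot be told apart from the track alone, the classification on the remote side needs one extra input. In the sketch below, that input is a hypothetical peerReportsSending flag that the application would maintain from its own signaling messages; the function and type names are assumptions as well.

```typescript
type RemoteTrackState = "Active" | "Remotely Disturbed" | "Muted" | "Ended";

// The properties read from the received MediaStreamTrack. The enabled
// property is left out on purpose: it does not reflect the sender's value.
interface ReceivedTrackView {
  readonly readyState: "live" | "ended";
  readonly muted: boolean;
}

// peerReportsSending is the last value learned through signaling:
//   true      the peer says it is sending media
//   false     the peer says it is not (disabled track, local problem...)
//   undefined nothing received yet, the track is trusted by default
function classifyRemoteTrack(
  track: ReceivedTrackView,
  peerReportsSending: boolean | undefined
): RemoteTrackState {
  // Predictable states: the track itself says that no media will come.
  if (track.readyState === "ended") return "Ended";
  if (track.muted) return "Muted";
  // Unpredictable states: only the signaling layer can tell them apart.
  if (peerReportsSending === false) return "Remotely Disturbed";
  return "Active";
}
```

Trusting the track when nothing has been signaled yet is a design choice; an application could just as well show a neutral "waiting" state until the first message arrives.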

Note: Other APIs such as the WebRTC Statistics API, the Audio API or the WebCodecs API could be used to give additional information about the incoming media.
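As an illustration of the statistics route, the incoming bitrate can be computed from two successive inbound-rtp reports returned by getStats(), using their timestamp (in milliseconds) and bytesReceived fields. The InboundSnapshot type and the inboundBitrate helper are hypothetical, and this is only a rough indicator: a disabled video track still sends black frames, so a low but non-zero bitrate is expected in that case.

```typescript
// The two fields taken from an "inbound-rtp" statistics report.
interface InboundSnapshot {
  timestamp: number; // milliseconds
  bytesReceived: number;
}

// Returns the average incoming bitrate, in bits per second, between two
// snapshots. Returns 0 when the snapshots are not in chronological order.
function inboundBitrate(
  previous: InboundSnapshot,
  current: InboundSnapshot
): number {
  const elapsedMs = current.timestamp - previous.timestamp;
  if (elapsedMs <= 0) {
    return 0;
  }
  const receivedBits = (current.bytesReceived - previous.bytesReceived) * 8;
  return (receivedBits * 1000) / elapsedMs;
}
```

A value that stays at 0 over several intervals while the track still reports live and unmuted is a hint that the track is in the Remotely Disturbed state.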

MediaStreamTrack orientations

Some evolutions are being discussed within the W3C, such as the possibility for the application to adapt to the shared content when it changes. For this particular case, the W3C has in mind a new paused property that would let the application make changes and then continue streaming. The summary and the proposal are available in the slides from the last W3C WebRTC Working Group Meeting.

Another evolution concerns a new configurationchange event that could be triggered from the track itself when its configuration, such as a constraint, has changed.

Additionally, the problem of the double mute (in app + device) has been open for some time, but no consensus has yet been reached due to the privacy issues involved.

Conclusions

Even though the MediaStreamTrack interface is used in all WebRTC scenarios today, some use cases are still, for me, not clean. The fact that the remote track can be out of sync with the local track is a complicating factor.

Therefore, in most cases, the signaling layer is used to send additional messages that make sense of what just happened (hard to live without a good signaling layer…).