WebRTC API Update 2025


For the first article this year, I’d like to begin by thanking all of you who continue to read these pieces and to wish you a happy new year.

It seems the well-wishes I already sent got lost in the network. Happily, I received a NACK, so I’m retransmitting them now. 😆

I’ve taken some time to review both the recent and upcoming API changes. Here’s a quick overview of what’s new and noteworthy!

Was 2024 a boring year for WebRTC APIs?

In 2024, no entirely new WebRTC APIs surfaced.

Instead, browsers used the time to refine existing APIs and align them more closely with the official standards, an approach that ultimately benefits developers and end-users alike by improving stability and consistency.

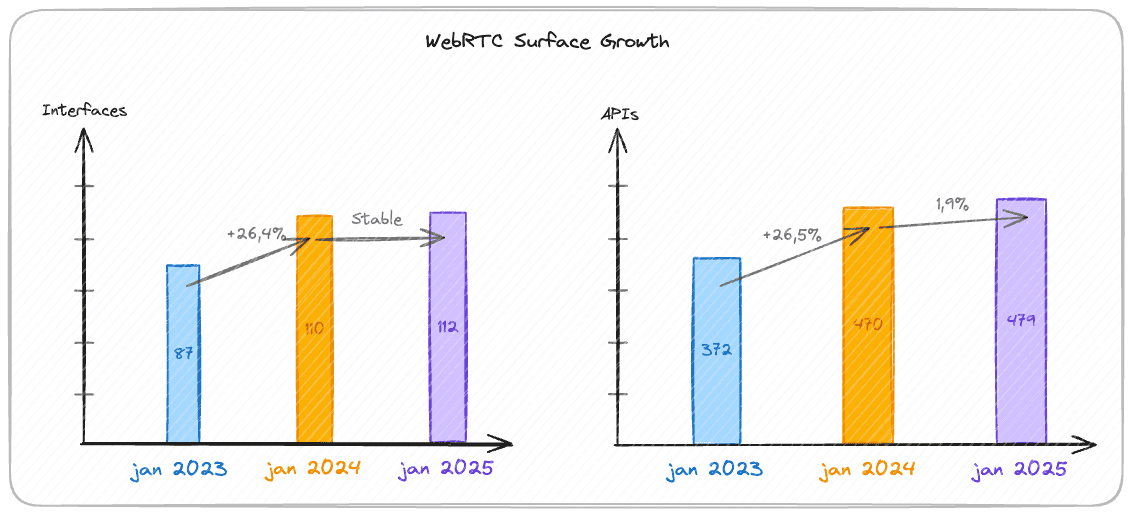

Last year, in the article WebRTC API Landscape in 2024, I noted a significant surge in the number of new interfaces developers had to understand.

Here are the latest statistics for the past year. Things seem different.

For the interfaces, the difference comes mainly from existing interfaces that were missing from my previous count and that I have now added. For the APIs, the difference comes from browsers implementing APIs that were already defined in the specification but not yet shipped. That’s a good sign.

Note: The count I’ve provided is not an official figure. It’s based on the APIs I chose to include as part of this WebRTC ecosystem.

New areas of work?

Developing WebRTC applications doesn’t mean using only the WebRTC API, even if its API ecosystem is huge. In most cases, we also rely on APIs that are close to WebRTC: Web Audio API, Permissions API, etc.

New devices and new technologies bring new APIs that are exposed to web developers.

And in the past year, I discovered some that seem promising.

WebGPU API

Until now, I haven’t dedicated time to exploring the WebGPU API.

As described on MDN:

“This API enables web developers to use the underlying system’s GPU (Graphics Processing Unit) to carry out high-performance computations and draw complex images that can be rendered in the browser.”

The main reason for my hesitation is that writing shaders—specialized instructions processed by the GPU—requires using WGSL (WebGPU Shading Language), which has similarities to Rust, making it less familiar for many developers.

However, the key advantage of WebGPU is its ability to offload tasks like background replacement to the GPU, ensuring higher performance and smoother framerates. This makes it a promising tool for applications that demand real-time processing and responsiveness.
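Before moving any processing to the GPU, an application has to check that WebGPU is actually usable. Here is a minimal sketch of such a check. The helper name `canUseWebGpu` and the small `GpuLike` types are my own (they only exist so the snippet compiles without WebGPU typings); the calls themselves, `navigator.gpu.requestAdapter()` and `adapter.requestDevice()`, are the standard entry points.

```typescript
// Minimal structural types (assumption: declared here only to avoid depending on WebGPU typings).
interface GpuAdapterLike {
  requestDevice(): Promise<unknown>;
}
interface GpuLike {
  requestAdapter(): Promise<GpuAdapterLike | null>;
}

// Hypothetical helper: resolves to true only when a GPU device can really be obtained.
async function canUseWebGpu(nav: { gpu?: GpuLike }): Promise<boolean> {
  if (!nav.gpu) return false; // the browser does not expose WebGPU at all
  try {
    const adapter = await nav.gpu.requestAdapter();
    if (!adapter) return false; // API present, but no suitable adapter on this machine
    await adapter.requestDevice();
    return true;
  } catch {
    return false; // the adapter or device request was rejected
  }
}

// In a page, this would be called as:
//   const ok = await canUseWebGpu(navigator as unknown as { gpu?: GpuLike });
```

If the check fails, the application can fall back to a WebGL or CPU pipeline for effects such as background replacement.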

WebXR API

This is relatively new, with APIs currently available primarily in Chrome and Safari.

The WebXR APIs are designed to handle AR (Augmented Reality) and VR (Virtual Reality) experiences, collectively referred to as XR (Cross Reality). Unlike the older WebVR API, which struggled to manage the growing ecosystem of devices and realities, WebXR is a new standard aimed at supporting any kind of reality, from VR headsets to AR-enabled devices.

However, to use WebXR effectively, you need to understand how WebGL and WebGPU rendering on a canvas works. WebXR relies on GPU rendering for immersive experiences (mostly through WebGL today, with WebGPU bindings still emerging), making it essential for developers to grasp the fundamentals of GPU-based programming.

WebUSB and WebBluetooth APIs

While not new, the WebUSB and WebBluetooth APIs allow developers to pair and interact with USB and Bluetooth devices directly from the browser.

Combined with the existing WebHID API, these tools significantly expand the ability to interact with external devices. For vertical (industry-specific) applications, this means developers can connect specialized hardware like cameras or sensors to stream media and data in real time.

How They Work:

• Both WebUSB and WebBluetooth function similarly. They require explicit user permission through a native browser popup that prompts the user to grant access to a “recognized” device. This ensures a secure and privacy-focused interaction.

• Automation of device access is not possible. Developers cannot programmatically retrieve a list of the USB or Bluetooth devices connected to the machine; at most, a page can see devices the user has already granted. Users must manually grant access to each device.

For those hoping to leverage these APIs to better identify devices in a WebRTC application, this won’t solve the problem as you can’t retrieve the list of devices. You’ll still need to rely on device labels for distinguishing them…
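To make the permission flow concrete, here is a small sketch for WebUSB. It first looks at the devices the user already granted (`getDevices()`), and only then opens the browser chooser (`requestDevice()`), which must be triggered from a user gesture such as a click. The helper name `getOrRequestUsbDevice` and the `UsbLike` types are assumptions of mine, written so the snippet compiles without WebUSB typings.

```typescript
// Minimal structural types (assumption: a subset of the real USB and USBDevice interfaces).
interface UsbDeviceLike {
  vendorId: number;
  productName?: string;
}
interface UsbLike {
  getDevices(): Promise<UsbDeviceLike[]>;
  requestDevice(options: { filters: Array<{ vendorId: number }> }): Promise<UsbDeviceLike>;
}

// Hypothetical helper: reuse an already granted device, otherwise ask the user.
async function getOrRequestUsbDevice(
  usb: UsbLike,
  vendorId: number,
): Promise<UsbDeviceLike | null> {
  // Only devices this origin was previously granted are returned here.
  const granted = await usb.getDevices();
  const known = granted.find((device) => device.vendorId === vendorId);
  if (known) return known;
  try {
    // Opens the native chooser; the page never sees the devices the user does not pick.
    return await usb.requestDevice({ filters: [{ vendorId }] });
  } catch {
    return null; // the user dismissed the chooser or nothing matched the filter
  }
}

// In a click handler, this would be called as:
//   const device = await getOrRequestUsbDevice(navigator.usb, 0x046d);
```

The vendor ID in the usage comment is only an illustration. WebBluetooth follows the same pattern with `navigator.bluetooth.requestDevice()`.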

Window Management API

When working, many of us prefer using multiple screens whenever possible. The goal of this API is to enable seamless multi-screen experiences by allowing web applications to:

• Detect how many screens are connected.

• Retrieve detailed information about available screens to optimize usage.

• Detect when screens are added or removed dynamically.

• Place a window on a specific screen (this is particularly handy for cases like ensuring your WebRTC application doesn’t open an incoming call popup on the wrong screen!).

You can review the full specification here: Window Management.
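As an illustration of the last point, here is a small sketch that computes where to open a popup so that it lands centered on a chosen screen. The helper name `popupFeaturesOn` and the `/incoming-call` URL are hypothetical; the `availLeft`, `availTop`, `availWidth` and `availHeight` properties are the ones exposed on the screens returned by `window.getScreenDetails()`.

```typescript
// Subset of the screen properties used by this sketch.
interface ScreenLike {
  availLeft: number;
  availTop: number;
  availWidth: number;
  availHeight: number;
}

// Hypothetical helper: builds a window.open() features string centered on `target`.
function popupFeaturesOn(target: ScreenLike, width: number, height: number): string {
  // Never ask for a popup larger than the usable area of the screen.
  const w = Math.min(width, target.availWidth);
  const h = Math.min(height, target.availHeight);
  const left = target.availLeft + Math.floor((target.availWidth - w) / 2);
  const top = target.availTop + Math.floor((target.availHeight - h) / 2);
  return `left=${left},top=${top},width=${w},height=${h}`;
}

// In a page (the user is asked for the window-management permission first):
//   const details = await window.getScreenDetails();
//   const target = details.screens.find((s) => !s.isPrimary) ?? details.currentScreen;
//   window.open("/incoming-call", "call", popupFeaturesOn(target, 400, 300));
```

Keeping the geometry in a pure function makes it easy to unit test without any screen attached; listening to the `screenschange` event on the returned details object lets the application recompute the target when a monitor is plugged in or removed.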

Notable changes in browsers

Here are some changes I noticed recently or that are coming soon.

Chrome M131

Nothing interesting regarding WebRTC in terms of new features.

Chrome M132

Chrome 132 made up for it with some significant updates:

Captured Surface Control API: This API provides a way to forward wheel events and adjust the zoom level of a captured tab. It’s particularly useful for maintaining a seamless experience during screen sharing, allowing users to scroll or zoom within the shared content without having to switch to the shared tab.

Window Management: As mentioned earlier, this API supports multi-screen setups, letting applications detect connected screens, manage screen additions/removals, and position windows on specific screens.

Chrome M133

Looking ahead, Chrome 133, expected to be released soon, promises the following features:

Freezing on Energy Saver: To maximize battery life, Chrome will pause background tabs that have been idle for a while and are consuming significant battery. However, exceptions will be made for tabs that provide audio or video conferencing functionality. This is determined based on active audio/video devices, peer connections with live tracks, or open data channels.

Camera Effect Status: Metadata from VideoFrame will now include information about whether an effect (e.g., background blur or replacement) has already been applied to the video frame, providing developers more control over processing pipelines.

setDefaultSinkId: This new method (behind a flag) will allow developers to define a default audio output device (speaker) for the top frame and all its subframes (iFrames), improving flexibility for multi-frame applications.

Chrome M134

In Chrome 134, the following updates are expected:

Removal of Non-Standard goog- Constraints: The old, non-standard goog- constraints for getUserMedia will be removed. While this change won’t trigger errors, the browser will fall back to default audio constraints instead, ensuring a smoother transition for applications relying on these outdated constraints.

WebSpeech API Integration with MediaStreamTrack: The WebSpeech API will gain support for MediaStreamTrack. This means you’ll be able to provide any inbound WebRTC stream (e.g., remote audio from a call) for speech recognition or captioning, rather than being limited to the default microphone input. This expands the versatility of speech recognition, particularly for applications like transcription or accessibility enhancements.
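For the removal of the goog- constraints, one way to prepare is to translate the legacy keys into their standard equivalents before calling getUserMedia. The sketch below is only an illustration: the helper name `migrateGoogAudioConstraints` is hypothetical, and the mapping table is my assumption of the closest standard constraint for three common legacy keys. Any other goog- key is dropped and reported, since it has no standard counterpart here.

```typescript
type ConstraintValue = boolean | number | string;

// Assumed mapping from legacy keys to the closest standard audio constraint.
const GOOG_TO_STANDARD: Record<string, string> = {
  googEchoCancellation: "echoCancellation",
  googAutoGainControl: "autoGainControl",
  googNoiseSuppression: "noiseSuppression",
};

// Hypothetical helper: returns standard constraints plus the legacy keys that were dropped.
function migrateGoogAudioConstraints(legacy: Record<string, ConstraintValue>): {
  constraints: Record<string, ConstraintValue>;
  dropped: string[];
} {
  const constraints: Record<string, ConstraintValue> = {};
  const dropped: string[] = [];
  for (const [key, value] of Object.entries(legacy)) {
    if (!key.startsWith("goog")) {
      constraints[key] = value; // already a standard constraint, keep as-is
      continue;
    }
    const standard = GOOG_TO_STANDARD[key];
    if (standard === undefined) {
      dropped.push(key); // no standard equivalent in the table above
    } else if (!(standard in legacy)) {
      constraints[standard] = value; // an explicit standard key always wins
    }
  }
  return { constraints, dropped };
}

// Usage in a page:
//   const { constraints } = migrateGoogAudioConstraints({ googEchoCancellation: false });
//   await navigator.mediaDevices.getUserMedia({ audio: constraints });
```

Logging the `dropped` list during the migration is a cheap way to find out which parts of an application still rely on the old behavior.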

Firefox 132

Firefox 132 focused primarily on API support updates, including enhancements for WebRTC components:

RTCRtpReceiver: Added support for getParameters(), enabling retrieval of the parameters for incoming RTP streams.

RTCRtpSender: Introduced canInsertDTMF on the sender’s dtmf object (RTCDTMFSender), which checks if DTMF (Dual-Tone Multi-Frequency) signals can be sent.

MediaStreamTrack: Added getCapabilities(), allowing developers to query the capabilities of a given media track.
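Since these three additions may not be present in older browser versions, a bit of feature detection helps. The following sketch is only an illustration: the helper name `detectRtpSupport` and the result shape are mine. Note that `canInsertDTMF` is read from `sender.dtmf`, which is where the WebRTC specification defines it, and that `dtmf` is null for non-audio senders.

```typescript
interface RtpSupport {
  receiverGetParameters: boolean;
  trackGetCapabilities: boolean;
  canInsertDtmf: boolean;
}

// Hypothetical helper: checks each feature on the objects of an existing call.
function detectRtpSupport(
  receiver: { getParameters?: unknown },
  sender: { dtmf?: { canInsertDTMF?: boolean } | null },
  track: { getCapabilities?: unknown },
): RtpSupport {
  return {
    receiverGetParameters: typeof receiver.getParameters === "function",
    trackGetCapabilities: typeof track.getCapabilities === "function",
    // False when dtmf is null (video sender) or the attribute is missing.
    canInsertDtmf: sender.dtmf?.canInsertDTMF === true,
  };
}

// Usage with a live RTCPeerConnection `pc` and an audio `track`:
//   const support = detectRtpSupport(pc.getReceivers()[0], pc.getSenders()[0], track);
```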

Firefox 133

Firefox 133 introduced significant additions around WebCodecs, with the inclusion of three new interfaces:

- ImageDecoder: Facilitates efficient decoding of image files.

- ImageTrack: Represents a single track in an image sequence.

- ImageTrackList: A collection of image tracks, useful for handling sequences of images (e.g., animated content).
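To show how these three interfaces fit together, here is a minimal sketch that decodes every frame of an image (for example an animated GIF). The helper name `decodeAllFrames` and the `ImageDecoderLike` type are assumptions of mine, reduced to the members used here: `tracks.ready`, `tracks.selectedTrack.frameCount` and `decode({ frameIndex })`.

```typescript
// Subset of the ImageDecoder interface used by this sketch.
interface ImageDecoderLike<Frame> {
  tracks: {
    ready: Promise<unknown>;
    selectedTrack: { frameCount: number } | null;
  };
  decode(options: { frameIndex: number }): Promise<{ image: Frame }>;
}

// Hypothetical helper: decodes the frames of the selected track, in order.
async function decodeAllFrames<Frame>(decoder: ImageDecoderLike<Frame>): Promise<Frame[]> {
  await decoder.tracks.ready; // track information is only reliable after this resolves
  const count = decoder.tracks.selectedTrack?.frameCount ?? 0;
  const frames: Frame[] = [];
  for (let index = 0; index < count; index++) {
    const { image } = await decoder.decode({ frameIndex: index });
    frames.push(image);
  }
  return frames;
}

// Usage in a page, where `response` comes from fetch():
//   const decoder = new ImageDecoder({ data: response.body, type: "image/gif" });
//   const frames = await decodeAllFrames(decoder);
//   // ...draw the frames, then call frame.close() on each one to release memory.
```

In a real application, each decoded frame is a VideoFrame and should be closed once it has been rendered.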

Firefox 134

Firefox 134 brought support for VP8 Simulcast in Screen Sharing, enabling the use of multiple layers in VP8 encoding during screen sharing sessions. However, the practical use cases for this remain unclear, as managing a low-resolution layer for screen content might not be straightforward or particularly beneficial in most scenarios.

Unfortunately, the release notes for upcoming Firefox versions (with the next one due in just a few days) remain unavailable, leaving us guessing about future updates.

Safari 18.0

Safari 18.0 introduced several notable updates, addressing key gaps in WebRTC and other areas:

getStats API Enhancements: A wide range of previously missing statistics were added to the getStats API, bringing Safari closer to parity with other browsers in terms of WebRTC debugging and performance monitoring.

MediaStreamTrack Processing in Web Workers: This version introduced support for processing MediaStreamTrack in a Web Worker, enabling offloading of complex media tasks from the main thread, improving performance for real-time applications.

WebXR Support for Vision Pro: Safari 18.0 also debuted WebXR support, tailored specifically for the Vision Pro, Apple’s mixed-reality headset, paving the way for immersive AR/VR experiences.

Safari 18.1 & 18.2

Subsequent minor updates delivered by the end of the year focused solely on bug fixes and stability improvements, with no new major features.

And, as is often the case with Safari, there’s no information on what’s coming next—keeping us in suspense!

In Summary

To wrap up, 2024 was a year where the focus was firmly on improving stability, fixing bugs, and enhancing browser compatibility for WebRTC.

While these updates may seem less flashy, they are critical for building a more reliable and seamless experience for developers and users alike.

As we look ahead, it will be exciting to see what innovations and advancements 2025 has in store for the WebRTC ecosystem.